
Ekta Prashnani, Research Scientist, NVIDIA

I am a Research Scientist at NVIDIA Research. I work on multi-modal foundation models and on verifying the trustworthy use of synthetic content; for example, Avatar Fingerprinting (ECCV'24) verifies the authorized use of synthetic talking-head videos.

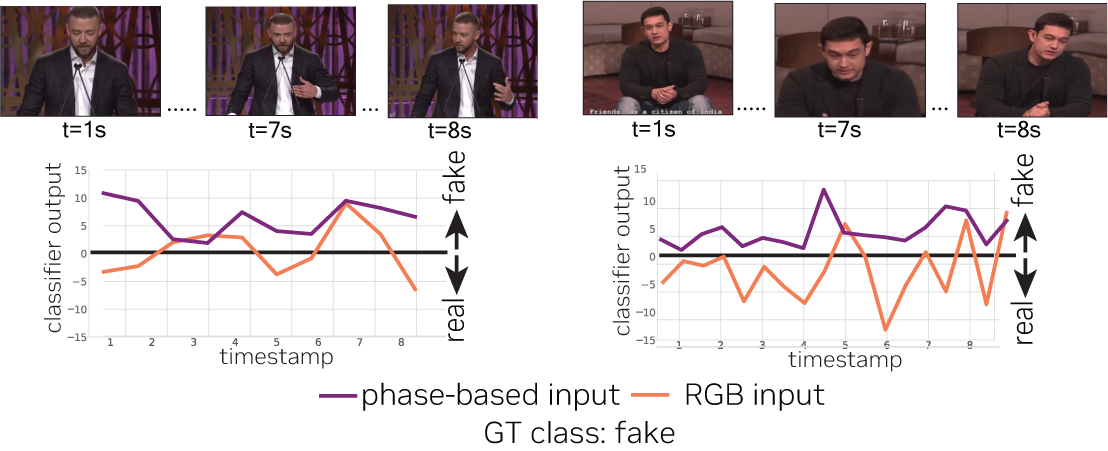

Some past notable works include PhaseForenics, a generalizable, phase-based deepfake detector; PieAPP a perceptually-consistent image error assessment method and accompanying dataset; and

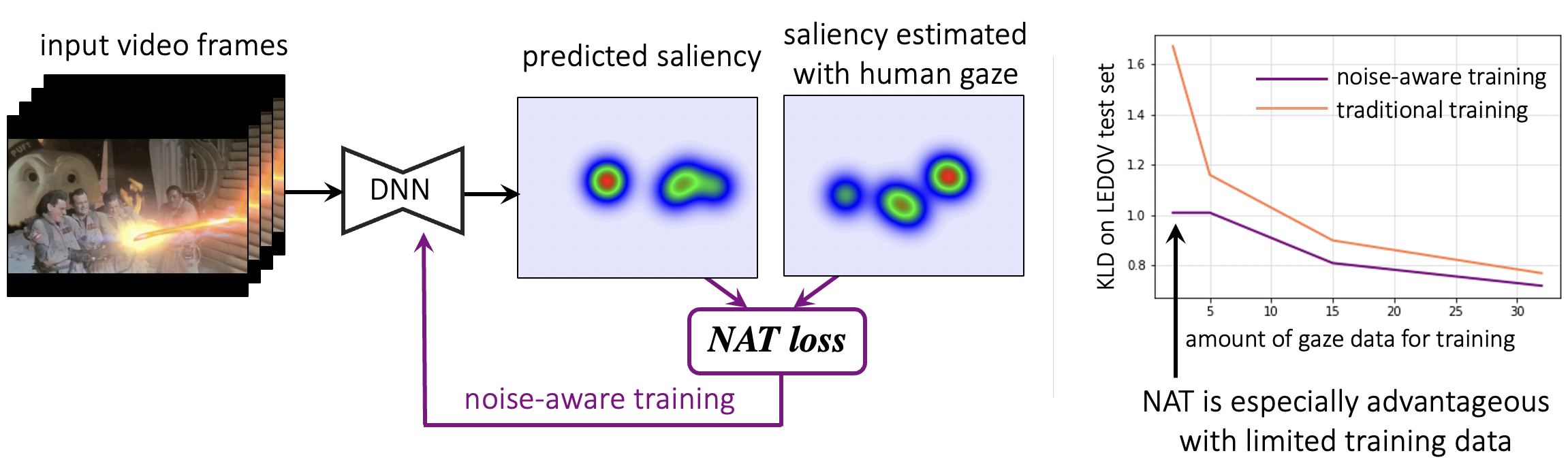

a noise-aware training approach for video saliency.

News

| Jul, 2024: Avatar Fingerprinting, the first work on verifying the authorized use of synthetic talking-head videos, has been accepted for publication at ECCV 2024 (summary video link). |

| Jun, 2024: PhaseForensics (a phase-based deepfake detector) has been accepted for publication in IEEE Transactions on Image Processing. Stay tuned for updates! |

| Jan, 2024: Leonard Salewski started his internship with me: an exciting start to 2024! |

| Sep, 2023: Our work on Avatar Fingerprinting for verifying the authorized use of synthetic talking-head videos is now available on arXiv. |

| Nov, 2022: Our work on PhaseForensics, a generalizable deepfake detector built on phase-based motion analysis, is now available on arXiv. |

| Apr, 2022: Started as a Research Scientist in the Human Performance and Experience team at NVIDIA. |

| Feb, 2022: Successfully defended my thesis "Data-driven Methods for Evaluating the Perceptual Quality and Authenticity of Visual Media". |

| Oct, 2021: Participated in the ICCV 2021 Doctoral Consortium. |

| Jun, 2021: Received the ECE Dissertation Fellowship from the ECE Department at UCSB. |

Publications

| Ekta Prashnani, Koki Nagano, Shalini De Mello, David Luebke, Orazio Gallo, "Avatar Fingerprinting for Authorized Use of Synthetic Talking-Head Videos," European Conference on Computer Vision, 2024. |

| Ekta Prashnani, Michael Goebel, B. S. Manjunath, "Generalizable Deepfake Detection with Phase-Based Motion Analysis," IEEE Transactions on Image Processing, 2024. |

| Ekta Prashnani, Orazio Gallo, Joohwan Kim, Josef Spjut, Pradeep Sen, Iuri Frosio, "Noise-Aware Video Saliency Prediction," British Machine Vision Conference, 2021. |

| Ekta Prashnani*, Herbert (Hong) Cai*, Yasamin Mostofi and Pradeep Sen, "PieAPP: Perceptual Image-Error Assessment through Pairwise Preference," Computer Vision and Pattern Recognition, 2018. |

| Ekta Prashnani, Maneli Noorkami, Daniel Vaquero and Pradeep Sen, "A Phase-Based Approach for Animating Images Using Video Examples," Computer Graphics Forum, August 2016, Volume 36, Issue 6. |

| *joint first authors |